Local AI Stack

Creating My Own Local AI Stack

As someone fascinated by artificial intelligence, I’ve always wanted to have my own local AI stack that I can use for various tasks, from generating text to creating stunning images. In this guide, I’ll walk you through my journey of setting up a local AI stack using Ollama, Msty (or Ollamac), and DiffusionBee (or EasyDiffusion).

What is a Local AI Stack?

A local AI stack is a collection of artificial intelligence software and tools that run on my own computer rather than on cloud-based services. This approach gives me more control over my data, responses that do not depend on an internet connection, and the freedom to experiment with different models and configurations.

Who Can Benefit from a Local AI Stack?

Anyone interested in artificial intelligence, machine learning, or natural language processing can benefit from a local AI stack. This includes:

- Developers and researchers who want to experiment with different models and configurations

- Artists and creatives who want to generate AI-powered art and music

- Students and educators who want to learn about AI and machine learning

- Anyone who is curious about the potential of AI and wants to explore its capabilities

The Components of My Local AI Stack

To build my local AI stack, I’ve chosen three key components:

- Ollama with LLaMA 3.2 Model: Ollama is an open-source AI framework that allows me to run large language models (LLMs) like LLaMA 3.2 on my local machine. LLaMA 3.2 is a state-of-the-art language model that can generate human-like text, answer questions, and even engage in conversations.

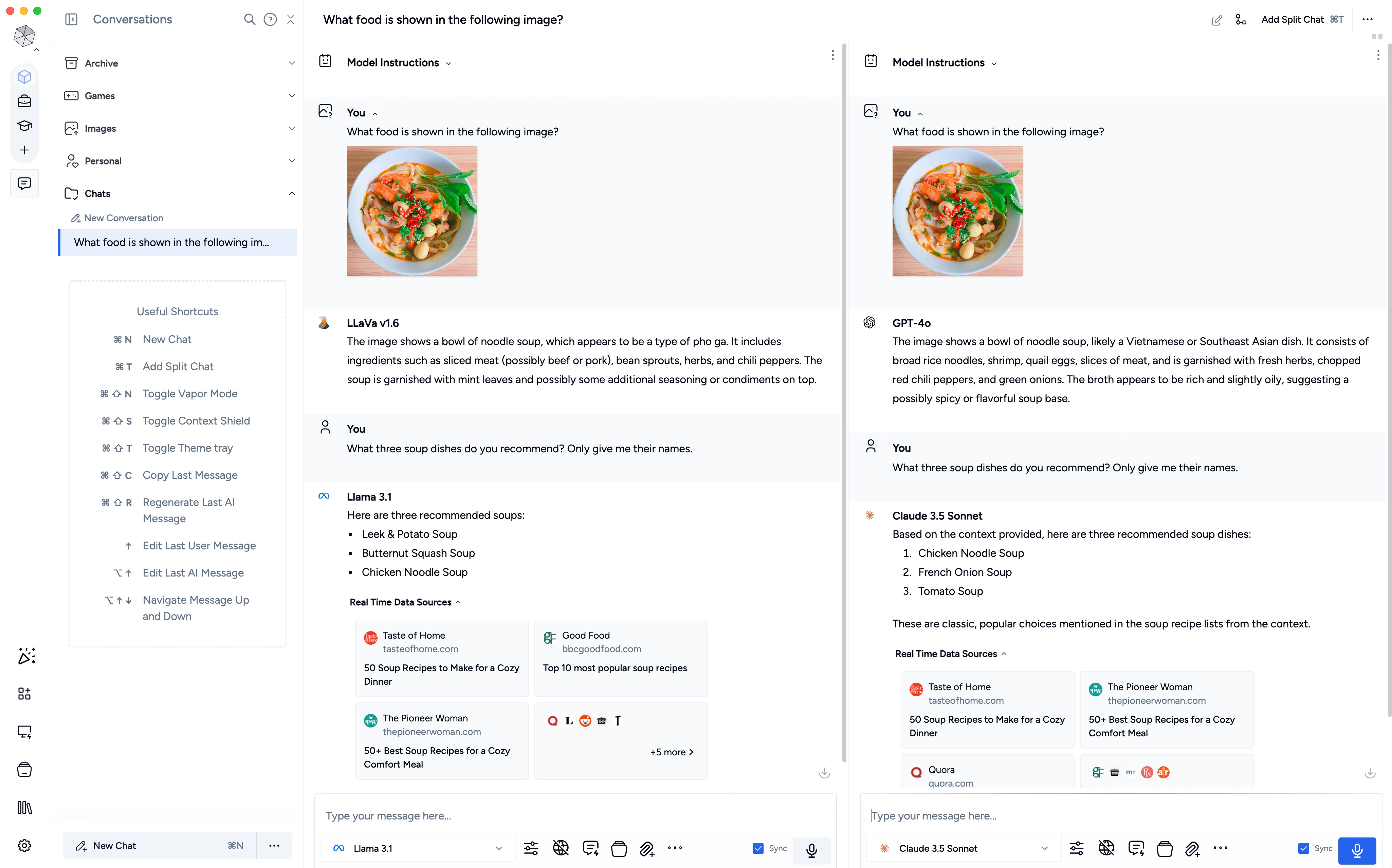

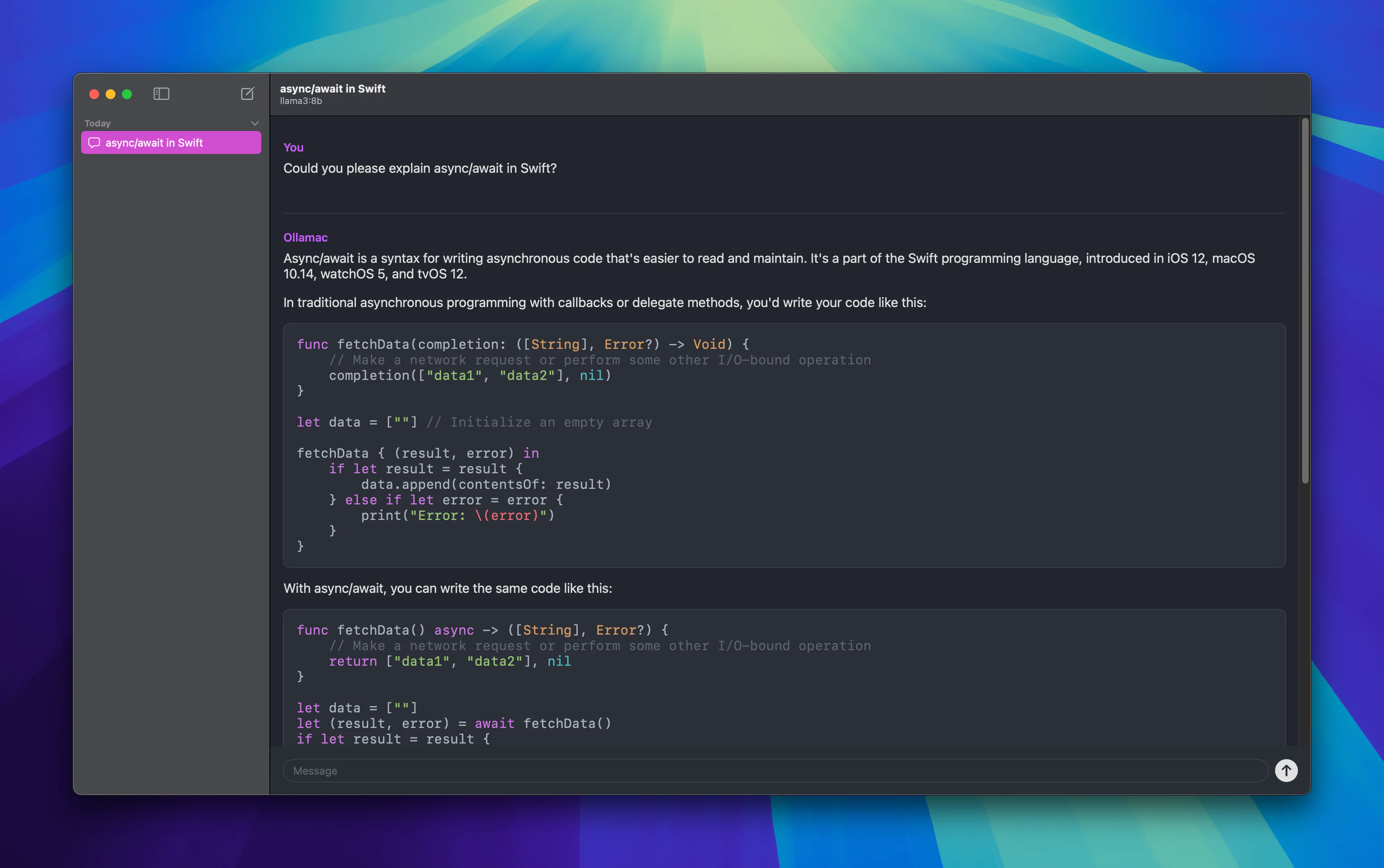

- Msty (Windows) or Ollamac (Mac): Msty (or Ollamac on Mac) is a user-friendly chat interface that allows me to interact with Ollama and the LLaMA 3.2 model. It provides a simple and intuitive way to ask questions, provide input, and receive responses from the AI.

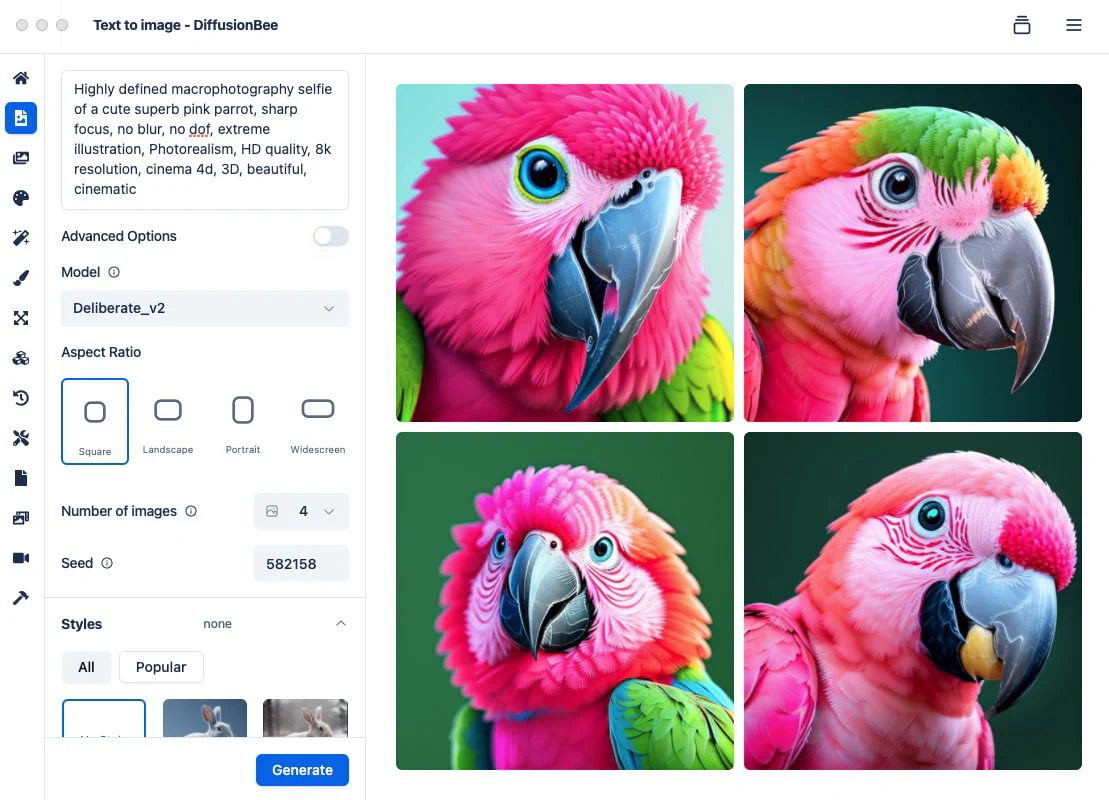

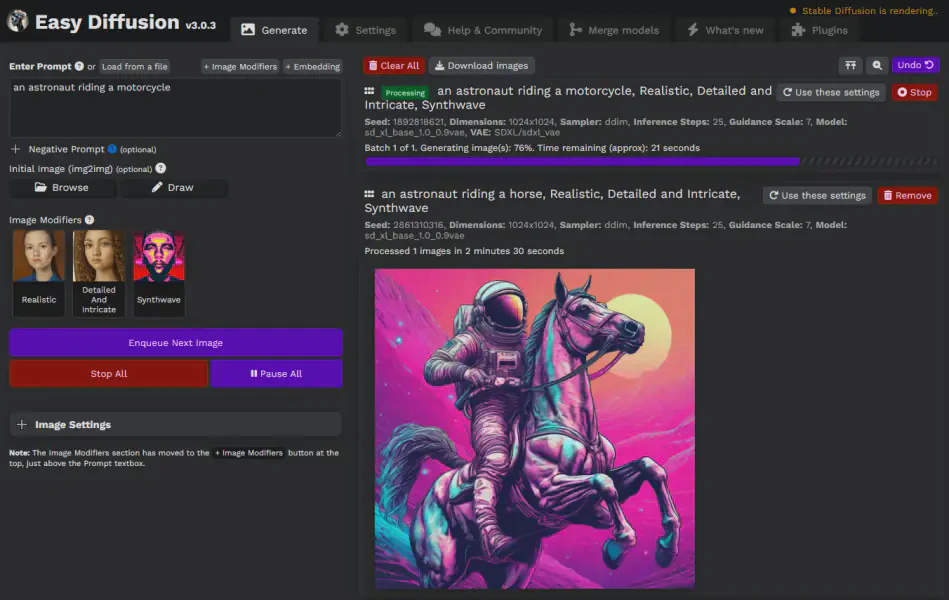

- DiffusionBee (Mac) or EasyDiffusion (Windows): For AI-generated imagery, I’ve chosen DiffusionBee on my Mac (or EasyDiffusion on Windows). These tools use a process called diffusion-based image synthesis to generate stunning images from text prompts.

Why Choose These Components?

I use both macOS and Windows computers, so I selected these components because they offer a good balance of performance, ease of use, and flexibility on both operating systems. Ollama provides a robust framework for running LLMs, while Msty (or Ollamac) offers a user-friendly interface for interacting with the AI. DiffusionBee (or EasyDiffusion) is an excellent choice for AI-generated imagery, as it produces high-quality images with minimal effort.

How to Set Up My Local AI Stack

Now that I’ve introduced the components, let’s dive into the setup process:

Step 1: Install Ollama and LLaMA 3.2 Model

To install Ollama, download the Ollama installer (a DMG on macOS) from https://ollama.com/ and then pull the LLaMA 3.2 model by running the following command in a terminal:

ollama pull llama3.2:latest

The LLaMA 3.2 model is now available on your computer and can be run with:

ollama run llama3.2:latest

You can type messages to the LLM and get responses much the same way as you would with a commercial tool like ChatGPT. When you need to quit, type /exit.
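Besides the interactive prompt, Ollama also serves a local HTTP API, which is what chat front ends talk to behind the scenes. Here is a minimal sketch of calling it from Python, assuming the Ollama server is running on its default address (http://localhost:11434) and that llama3.2:latest has been pulled as shown above. The helper names build_generate_request and ask are my own, chosen for illustration.

```python
import json
import urllib.request

# Assumption: Ollama is listening on its default local address and port.
OLLAMA_URL = "http://localhost:11434/api/generate"


def build_generate_request(prompt, model="llama3.2:latest"):
    """Build a non-streaming POST request for Ollama's /api/generate endpoint."""
    payload = {"model": model, "prompt": prompt, "stream": False}
    return urllib.request.Request(
        OLLAMA_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def ask(prompt, model="llama3.2:latest"):
    """Send the prompt to the local model and return the generated text."""
    with urllib.request.urlopen(build_generate_request(prompt, model)) as resp:
        # With "stream": False the server replies with a single JSON object
        # whose "response" field holds the full generated text.
        return json.loads(resp.read())["response"]
```

Calling ask("Explain what a local AI stack is in one sentence.") should return the model's reply as a string. Setting "stream" to False keeps the example short; without it, the server sends the answer in chunks as it is generated.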

Step 2: Install Msty (Windows) or Ollamac (Mac)

Next, I’ll install Msty (or Ollamac) to interact with Ollama:

- Download the Msty app from https://msty.app/ (or Ollamac from https://github.com/kevinhermawan/Ollamac)

- Configure Msty to connect to Ollama as a local model, following https://docs.msty.app/features/model-selector#configuring-local-models (Ollamac defaults to using the local models provided by Ollama)
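
If the chat app does not show any local models, it can help to confirm that Ollama is actually running and to see which models it has. The sketch below assumes Ollama's default address (http://localhost:11434) and uses its /api/tags endpoint, which lists the models that have been pulled. The function names are illustrative, not part of Msty or Ollamac.

```python
import json
import urllib.error
import urllib.request

# Assumption: default Ollama address; adjust if you changed the host or port.
TAGS_URL = "http://localhost:11434/api/tags"


def parse_model_names(payload):
    """Extract model names from the JSON body returned by /api/tags."""
    return [model["name"] for model in payload.get("models", [])]


def list_local_models(url=TAGS_URL, timeout=5):
    """Return the pulled model names, or None if the server is unreachable."""
    try:
        with urllib.request.urlopen(url, timeout=timeout) as resp:
            return parse_model_names(json.loads(resp.read()))
    except (urllib.error.URLError, OSError):
        # Nothing answered at that address, so Ollama is probably not running.
        return None
```

A result of None suggests that Ollama is not running, so start it first. An empty list means the server is up but no model has been pulled yet.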

Step 3: Install DiffusionBee (Mac) or EasyDiffusion (Windows)

For AI-generated imagery, I’ll install DiffusionBee (or EasyDiffusion):

- Download DiffusionBee (or EasyDiffusion)

- Follow the installation instructions for my operating system (Mac or Windows)

- Configure DiffusionBee (or EasyDiffusion) to use the desired model and settings

Conclusion

Creating my own local AI stack has been an exciting journey, and I’m thrilled to have Ollama, Msty (or Ollamac), and DiffusionBee (or EasyDiffusion) at my fingertips. With this setup, I can explore the possibilities of AI-generated text and imagery, and I’m eager to see what the future holds for this technology. If you’re interested in joining me on this journey, I encourage you to follow this guide and start building your own local AI stack today!